|

http://img.youtube.com/vi/nKKpF-CVAUE/0.jpg

Search Buzz Video Recap: Search Engine Land Dropped In Google, Zero Search Results, Thanksgiving Update & More https://ift.tt/2RoD78V This week in search, I covered how Google removed Search Engine Land from its index this morning by accident. Google started showing zero search results again, and it looks like it will stick. Google may have done a big algorithm update on Thanksgiving day... SEO via Search Engine Roundtable https://ift.tt/1sYxUD0 November 30, 2018 at 08:07AM


https://www.searchenginejournal.com/wp-content/uploads/2018/11/Who-Should-Win-as-the-Search-Personality-of-Personalities-–-SEJ-Survey-Says.png

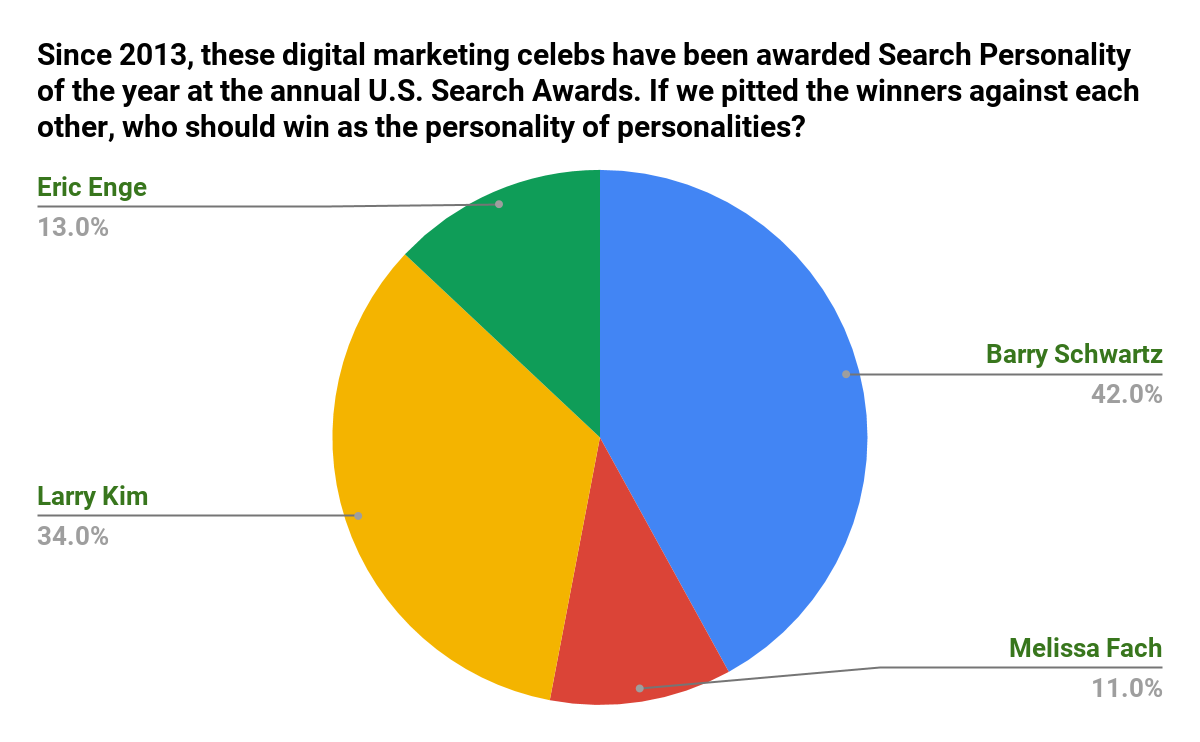

Who Should Win as the U.S. Search Personality of Personalities? [POLL] by @A_Ninofranco https://ift.tt/2E8qhsk There is so much to like about the SEO community. As our Executive Editor Danny Goodwin puts it:

The community is lucky to have industry thought leaders who contribute to the overall growth of the space by providing valuable insights derived from their own experiences. Since 2013, several digital marketing celebrities have been awarded Search Personality of the year at the annual U.S. Search Awards – a competition that celebrates the very best in SEO, PPC, digital and content marketing in the U.S. The Search Personality of the year award recipients include:

If we pitted the winners against each other, who should win as the personality of personalities? We asked our Twitter community to find out.

Who Should Win as the Search Personality of Personalities?

Here are the results from this #SEJSurveySays poll question. According to SEJ’s Twitter audience:

NOTE: Past winners Marty Weintraub of Aimclear and Duane Forrester of Yext weren’t forgotten. Twitter only let us put up to four poll choices, so we chose the four most recent winners.

One thing all these great personalities have in common? Ties to Search Engine Journal.

Eric Enge, General Manager of Perficient Digital (and the founder and CEO of Stone Temple, which was acquired by Perficient in July 2018), wrote a chapter of our Complete SEO Guide: What to Do When Things Go Wrong in SEO.

Melissa Fach is the Social and Community Manager at Pubcon, the blog editor at SEMrush, and works as a consultant with a few select clients. She is also a former managing editor of SEJ, from 2011-2013.

Larry Kim is the CEO of Mobile Monkey and founder of WordStream. Nearly 100 posts he wrote have been published on SEJ since 2010.

Barry Schwartz is the CEO of RustyBrick, the founder of the Search Engine Roundtable, and the News Editor at Search Engine Land. What people might not remember is that from 2005-2006 he was a news writer for SEJ.

While Schwartz may be the most recent U.S. Search Personality winner (and the winner of our poll pitting the four most recent winners against each other), it’s important to remember that everyone mentioned here is a winner. They’re all awesome at what they do. All six of these brilliant people have helped countless people in our industry over the years through speaking at conferences, writing for top blogs and publications, and sharing their knowledge and expertise with our community in other ways.

So, here’s a big THANK YOU to all six of you (Marty, Duane, Larry, Eric, Melissa, and Barry) from all of us at Search Engine Journal.

The Leading SEO Experts, Publications & Conferences You Need to Know

Want to learn more about search engine optimization (SEO)?

Have Your Say

Who do you think deserves to be called the “Search Personality of Personalities?” Tag us on social media to let us know.
Be sure to have your say in the next survey: check out #SEJSurveySays on Twitter for future polls and data.

Image Credit: Chart created by Shayne Zalameda

SEO via Search Engine Journal https://ift.tt/1QNKwvh November 30, 2018 at 07:39AM

https://ift.tt/2O36xs4

PSA: Not All Sites Were Moved Yet To Google Mobile First Indexing https://ift.tt/2KHTYRJ I keep getting asked if Google is now done migrating sites to the mobile first indexing process. The answer is no: there are many sites, including this one, that have not been migrated yet. Google has said many times that it can take years and years for it to happen. SEO via Search Engine Roundtable https://ift.tt/1sYxUD0 November 30, 2018 at 07:02AM

https://ift.tt/2SiEWUU

Bing Search Spam Fighter: Interesting Reality Check From SEOs https://ift.tt/2FPERXA Frédéric Dubut is a Bing spam fighter who works on search quality and safety at Bing; we have quoted him here a few times. He attended the TechSEO Boost search conference yesterday and posted on Twitter: "I always find it fascinating to hear the "reality check" from SEO folks." SEO via Search Engine Roundtable https://ift.tt/1sYxUD0 November 30, 2018 at 06:53AM

https://ift.tt/2SoD39v

The importance of understanding intent for SEO https://ift.tt/2zyojxz Search is an exciting, ever-changing channel. Algorithm updates from Google, innovations in the way we search (mobile, voice search, etc.), and evolving user behavior all keep us on our toes as SEOs. The dynamic nature of our industry requires adaptable strategies and ongoing learning to be successful. However, we can’t become so wrapped up in chasing new strategies and advanced tactics that we overlook fundamental SEO principles. Recently, I’ve noticed a common thread of questioning coming from our clients and prospects around searcher intent, and I think it’s something worth revisiting here. In fact, searcher intent is such a complex topic it’s spawned multiple scientific studies (PDF) and research (PDF). However, you might not have your own internal research team, leaving you to analyze intent and the impact it has on your SEO strategy on your own. Today, I want to share a process we go through with clients at Page One Power to help them better understand the intent behind the keywords they target for SEO. Two questions we always ask when clients bring us a list of target keywords and phrases are:

These questions drive at intent and force us, and our clients, to analyze audience and searcher behavior before targeting specific keywords and themes for their SEO strategy. The basis for any successful SEO strategy is a firm understanding of searcher intent.

Types of searcher intent

Searcher intent refers to the “why” behind a given search query: what is the searcher hoping to achieve? Searcher intent can be categorized in four ways:

Informational
Navigational
Commercial
Transactional

Categorizing queries into these four segments will help you better understand what types of pages searchers are looking for.
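As a rough illustration of sorting queries into the four segments, here is a minimal keyword heuristic. It is only a sketch: the words "price" and "sale" are the transactional examples the article itself gives, while every other signal word, the `brands` parameter, and the function name are assumptions made for this example. Real intent analysis should still start from the search results themselves.

```python
import re

# Signal words per intent. "price" and "sale" come from the article;
# all other words are illustrative assumptions, not an established list.
TRANSACTIONAL_WORDS = {"price", "sale", "buy", "coupon", "order"}
COMMERCIAL_WORDS = {"best", "review", "reviews", "vs", "top", "compare"}


def classify_intent(query: str, brands: frozenset = frozenset()) -> str:
    """Return a rough intent label for a search query."""
    words = set(re.findall(r"[a-z0-9]+", query.lower()))
    if words & TRANSACTIONAL_WORDS:
        return "transactional"
    if words & COMMERCIAL_WORDS:
        return "commercial"
    if words & brands:
        # The searcher already names a brand they want to reach.
        return "navigational"
    # Informational queries are described as the most common type,
    # so they serve as the fallback here.
    return "informational"
```

For example, `classify_intent("best running shoes")` yields `"commercial"`, while a query with none of the signal words falls back to `"informational"`.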

Informational intent

People entering informational queries seek to learn information about a subject or topic. These are the most common types of searches and typically have the largest search volumes. Informational searches also exist at the top of the marketing funnel, during the discovery phase where visitors are much less likely to convert directly into customers. These searchers want content-rich pages that answer their questions quickly and clearly, and the search results associated with these searches will reflect that.

Navigational intent

Searchers with navigational intent already know which company or brand they are looking for, but they need help with navigation to their desired page or website. These searches often involve queries that feature brand names or specific products or services. These SERPs typically feature homepages, or specific product or service pages. They might also feature mainstream news coverage of a brand.

Commercial intent

Commercial queries exist as a sort of hybrid intent: a mix of informational and transactional. These searches have transactional intent. The searcher is looking to make a purchase, but they are also looking for informational pages to help them make their decision. The results associated with commercial intent usually have a mix of informational pages and product or service pages.

Transactional intent

Transactional queries have the most commercial intent as these are searchers looking to make a purchase. Common words associated with transactional searches include [price] or [sale]. Transactional SERPs are typically 100 percent commercial pages (products, services and subscription pages).

Categorizing keywords and search queries into these four areas makes it easier to understand what searchers want, informing page creation and optimization.

Optimizing for intent: Should my page rank there?

With a clear understanding of the different types of intent, we can dive into optimizing for intent.
When we get a set of target keywords from a client, the first thing we ask is, “Should your website be ranking in these search results?” Asking this question leads to other important questions:

Before you can optimize your pages for specific keywords and themes, you need to optimize them for intent.

The best place to start your research is the results themselves. Simply analyzing the current ranking pages will answer your questions about intent. Are the results blog posts? Reviews or “Top 10” lists? Product pages? If you scan the results for a given query and all you see is in-depth guides and resources, the chances that you’ll be able to rank your product page there are slim to none. Conversely, if you see competitor product pages cropping up, you know you have legitimate opportunity to rank your product page with proper optimization.

Google wants to show pages that answer searcher intent, so you want to make sure your page does the best job of helping searchers achieve whatever they set out to do when they typed in their query. On-page optimization and links are important, but you’ll never be able to compete in search without first addressing intent.

This research also informs content creation strategy. To rank, you will need a page that is at least comparable to the current results. If you don’t have a page like that you will need to create one. You can also find (a few) opportunities where the results currently don’t do a great job of answering searcher intent, and you could compete quickly by creating a more focused page. You can even take it a layer deeper and consider linking intent: is there an opportunity here to build a page that can act as a resource and attract links?

Analyzing intent will inform the other aspects of your SEO campaign. Asking yourself if your current or hypothetical page should rank in each SERP will help you identify, and optimize for, searcher intent.

Answering intent: What will this accomplish?

A key follow-up question we also ask is, “What will ranking accomplish?” The simplified answer we typically get is “more traffic.” But what does that really mean? Depending on the intent associated with a given keyword, that traffic could lead to brand discovery, authority building, or direct conversions.
You need to consider intent when you set expectations and assign KPIs.

Keep in mind that not all traffic needs to convert. A balanced SEO strategy will target multiple stages of the marketing funnel to ensure all your potential customers can find you. Building brand affinity is an important part of earning traffic in the first place, with brand recognition increasing click-through rate by 2-3x. Segmenting target keywords and phrases based on intent will help you identify and fill any gaps in your keyword targeting. Ask yourself what ranking for potential target keywords could accomplish for your business, and how that aligns with your overall marketing goals. This exercise will force you to drill down and really focus on the opportunities (and SERPs) that can make the most impact.

Searcher intent informs SEO

Search engine optimization should start with optimizing for intent. Search engines continue to become more sophisticated and better at measuring how well a page matches intent, and pages that rank well are pages that best answer the query posed by searchers. To help our clients at Page One Power refocus on intent, we ask them the following questions:

Ask yourself these same questions as you target keywords and phrases for your own SEO campaign to ensure you’re accounting for searcher intent.

Opinions expressed in this article are those of the guest author and not necessarily Search Engine Land. Staff authors are listed here.

About The Author

Andrew Dennis is a Content Marketing Specialist at Page One Power. Along with his column here on Search Engine Land, Andrew also writes about SEO and link building for the Page One Power blog, Linkarati. When he's not reading or writing about SEO, you'll find him cheering on his favorite professional teams and supporting his alma mater the University of Idaho. SEO via Search Engine Land https://ift.tt/1BDlNnc November 30, 2018 at 06:46AM

Google Posts Large AMP Indexing FAQs For SEOs & Webmasters https://ift.tt/2RqOnBU Dong-Hwi Lee, a Googler, posted a large FAQ in the Google Webmaster Help forums covering indexing questions related to AMP. It is a very comprehensive FAQ on the topic, and I thought it would be useful to bring this document to the community's attention. SEO via Search Engine Roundtable https://ift.tt/1sYxUD0 November 30, 2018 at 06:35AM

https://ift.tt/2Qqo29G

How to prepare for a JS migration https://ift.tt/2DRjhzi An 80 percent decrease in organic traffic is the nightmare of every business. Unfortunately, such a nightmarish scenario may become reality if a website migration is done incorrectly; instead of improving the current situation it eventually leads to catastrophe.

Source: http://take.ms/V6aDv

There are many types of migrations, such as changing, merging or splitting domains, redesigning the website or moving to a new framework. Web development trends clearly show that the use of JavaScript has been growing in recent years and that JavaScript frameworks are becoming more and more popular. In the future, we can expect that more and more websites will be using JavaScript.

Source: https://httparchive.org/reports/state-of-javascript

As a consequence, SEOs will be faced with the challenge of migrating to JavaScript frameworks. In this article, I will show you how to prepare for a migration of a website built with static HTML to a JavaScript framework.

Search engines vs. JavaScript

Google is the only search engine that is able to execute JavaScript and “see” elements like content and navigation even if they are powered by JavaScript. However, there are two things that you always need to remember when considering a change to a JS framework.

Firstly, Google uses Chrome 41 for rendering pages. This is a three-year-old browser that does not support all the modern features needed for rendering advanced functionality. Even if Google can render JS websites in general, some important parts may not be discovered because they rely on technology that Google can’t process.

Secondly, executing JS is an extremely heavy process, so Google indexes JS websites in two waves. The first wave gets the raw HTML indexed. In the case of JS-powered websites, this translates to an almost empty page. During the second wave, Google executes JavaScript so it can “see” the additional elements loaded by JS. Only then is the full content of the page ready for indexing.

The combination of these two elements means that if you decide to move your current website to a JavaScript framework, you always need to check if Google can efficiently crawl and index your website.

Migration to a JS framework done right

SEOs may not like JavaScript, but that doesn’t mean its popularity will stop growing. We should prepare as much as we can and implement the modern framework correctly. Below you will find information that will help you navigate through the process of changing the current framework. I do not provide “ready-to-go” solutions because your situation will be the result of different factors and there is no universal recipe. However, I want to stress the elements you need to pay particular attention to.

Cover the basics of standard migration

You can’t count on a miracle in which Google understands the change without your help. The whole process of migration should be planned in detail. I want to keep the focus on JS migration for this article, so if you need detailed migration guidelines, Bastian Grimm has already covered this.

Source: Twitter

Understand your needs in terms of serving the content to Google

This step should be done before anything else. You need to decide how Google will receive the content of your website. You have two options:

1. Client-side rendering: This means that you are totally relying on Google for rendering. However, if you go for this option you accept some inefficiency. The first important drawback of this solution is the deferred indexing of your content due to the two waves of indexing mentioned above. Secondly, not everything may work properly because Chrome 41 does not support all the modern features. And last, but not least, not all search engines can execute JavaScript, so your JS website will seem empty to Bing, Yahoo, Twitter and Facebook.

Source: YouTube

2. Server-side rendering: This solution relies on rendering by an external mechanism or an additional mechanism/component responsible for rendering JS websites, creating a static snapshot and serving it to the search engine crawlers. At the Google I/O conference, Google announced that serving a separate version of your website only to the crawler is fine. This is called Dynamic Rendering, which means that you can detect the crawler’s User Agent and send the server-side rendered version. This option also has its disadvantages: creating and maintaining additional infrastructure, possible delays if a heavy page is rendered on the server, or possible issues with caching (Googlebot may receive a not-fresh version of the page).
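The User Agent detection behind Dynamic Rendering can be sketched in a few lines. This is a minimal illustration, not a production setup: the token list, the function names, and the "prerendered"/"client" labels are all assumptions made for this example, and the list is neither official nor complete.

```python
# Substrings used to spot crawlers in the User-Agent header.
# This list is an illustrative assumption, not an official registry.
CRAWLER_TOKENS = ("googlebot", "bingbot", "twitterbot", "facebookexternalhit")


def is_crawler(user_agent: str) -> bool:
    """Return True when the User-Agent string contains a known crawler token."""
    ua = (user_agent or "").lower()
    return any(token in ua for token in CRAWLER_TOKENS)


def choose_variant(user_agent: str) -> str:
    """Pick which version of a page to serve for this request."""
    return "prerendered" if is_crawler(user_agent) else "client"
```

A request handler would call `choose_variant(request_user_agent)` and serve the static snapshot only when the result is `"prerendered"`. Both variants must carry the same content; serving crawlers something users cannot see may be treated as cloaking.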

Source: Google

Before migration, you need to decide whether you need option 1 or 2. If the success of your business is built around fresh content (news, real estate offers, coupons), I can’t imagine relying only on the client-side rendered version. It may result in dramatic delays in indexing, so your competitors may gain an advantage. If you have a small website and the content is not updated very often, you can try to leave it client-side rendered, but before launching the website you should test whether Google really does see the content and navigation. The most useful tools to do so are Fetch as Google in GSC and the Chrome 41 browser. However, Google officially stated that it’s better to use Dynamic Rendering to make sure they will discover frequently changing content correctly and quickly.

Framework vs. solution

If your choice is to use Dynamic Rendering, it’s time to decide how to serve the content to the crawlers. There is no one universal answer. In general, the solution depends on the technology AND developers AND budget AND your needs. Below you will find a review of the options you have from a few approaches, but the choice is yours:

Probably I’d go for pre-rendering, for example with prerender.io. It’s an external service that crawls your website, renders your pages and creates static snapshots to serve them if a specific User Agent makes a request. A big advantage of this solution is the fact that you don’t need to create your own infrastructure. You can schedule recrawling and create fresh snapshots of your pages. However, for bigger and frequently changing websites, it might be difficult to make sure that all the pages are refreshed on time and show the same content both to Googlebot and users.
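One way to keep an eye on snapshot freshness is to compare each page's last content change with the time its snapshot was taken. The sketch below assumes you can export those two timestamps per URL; the function name, the tuple layout, and the 24-hour default are hypothetical choices for this example and are not part of prerender.io.

```python
from datetime import datetime, timedelta


def stale_snapshots(pages, now, max_age=timedelta(hours=24)):
    """Return URLs whose snapshot no longer reflects the live page.

    pages: iterable of (url, content_updated_at, snapshot_taken_at) tuples.
    """
    stale = []
    for url, updated_at, snapshot_at in pages:
        outdated = snapshot_at < updated_at    # content changed after the snapshot
        too_old = now - snapshot_at > max_age  # snapshot exceeded the allowed age
        if outdated or too_old:
            stale.append(url)
    return stale
```

The resulting list can feed whatever recrawl scheduling the pre-rendering setup offers, so that frequently changing pages are refreshed first.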

If you build the website with one of the popular frameworks like React, Vue, or Angular, you can use one of the methods of Server Side Rendering dedicated to a given framework. Here are some popular matches: Using one of these frameworks installed on the top of React or Vue results in creating a universal application, meaning that the exact same code can be executed both on the server (Server Side rendering) and in the client (Client Side Rendering). It minimizes the issues with a content gap that you could have if you rely on creating snapshots and heavy caching, as with prerender.

It may happen that you are going to use a framework that does not have a ready-to-use solution for building a universal application. In this case, you can build your own rendering infrastructure. This means installing a headless browser on your server that will render all the subpages of your website and create the snapshots that are served to the search engine crawlers. Google provides a solution for that: Puppeteer is a library that does a similar job to prerender.io. However, everything happens on your infrastructure.

For this, I’d use hybrid rendering. It’s said that this solution provides the best experience to both users and crawlers because both receive a server-side rendered version of the page on the initial request. In many cases, serving an SSR page is faster for users than executing all the heavy files in the browser. All subsequent user interactions are served by JavaScript. Crawlers do not interact with the website by clicking or scrolling, so every visit is a new request to the server and they always receive an SSR version. Sounds good, but it’s not easy to implement.

Source: YouTube

The option that you choose will depend on many factors like technology, developers and budgets. In some cases, you may have a few options, but in many cases, you may have many restrictions, so picking a solution will be a single-choice process.

Testing the implementation

I can’t imagine a migration without creating a staging environment and testing how everything works. Migration to a JavaScript framework adds complexity and additional traps that you need to watch out for. There are two scenarios.

If for some reason you decided to rely on client-side rendering, you need to install Chrome 41 and check how it renders and works. One of the most important points of an audit is checking errors in the console in Chrome Dev Tools. Remember that even a small error in processing JavaScript may result in issues with rendering.

If you decided to use one of the methods of serving the content to the crawler, you will need to have a staging site with this solution installed. Below, I’ll outline the most important elements that should be checked before going live with the website:

1. Content parity

You should always check if users and crawlers are seeing exactly the same content. To do that, you need to switch the user agent in the browser to see the version sent to the crawlers. You should verify the general discrepancies regarding rendering. However, to see the whole picture you will also need to check the DOM (Document Object Model) of your website. Copy the source code from your browser, then change the User Agent to Googlebot and grab the source code as well. Diffchecker will help you to see the differences between the two files. You should especially look for differences in the content, navigation and metadata. An extreme situation is when you send an empty HTML file to Googlebot, just as Disqus does.
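The content parity check can also be scripted once both versions of the source code are saved. The sketch below is an assumption-laden stand-in for the manual Diffchecker step: it uses Python's standard `html.parser` and `difflib`, compares only the title, link targets and visible text, and all function and class names are made up for this example.

```python
import difflib
from html.parser import HTMLParser


class _PageSummary(HTMLParser):
    """Collects the title, link targets and visible text of an HTML document."""

    def __init__(self):
        super().__init__()
        self.title, self.links, self.text = "", [], []
        self._in_title = False
        self._skip = 0  # depth inside <script>/<style>, whose text is not content

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag in ("script", "style"):
            self._skip += 1
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False
        elif tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if self._in_title:
            self.title += data.strip()
        elif not self._skip and data.strip():
            self.text.append(data.strip())


def summarize(html):
    """Flatten a page into comparable lines: title, sorted links, text chunks."""
    parser = _PageSummary()
    parser.feed(html)
    parser.close()
    lines = [f"title: {parser.title}"]
    lines += [f"link: {href}" for href in sorted(parser.links)]
    lines += [f"text: {chunk}" for chunk in parser.text]
    return lines


def parity_diff(user_html, crawler_html):
    """Return '-' lines (missing for the crawler) and '+' lines (crawler only)."""
    diff = difflib.unified_diff(
        summarize(user_html), summarize(crawler_html), "user", "crawler", lineterm=""
    )
    return [
        line for line in diff
        if line[:1] in ("+", "-") and not line.startswith(("+++", "---"))
    ]
```

An empty result means the two versions match on these three signals. Lines starting with `-` are links or text that users see but the crawler version lacks.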

Source: Google This is what their SEO Visibility looks like:

Source: http://take.ms/Fu3bL

They’ve seen better days. Now the homepage is not even indexed.

2. Navigation and hyperlinks

To be 100 percent sure that Google sees, crawls and passes link juice, you should follow the clear recommendation for implementing internal links shared at the Google I/O Conference 2018.

Source: YouTube

If you rely on server-side rendering methods, you need to check if the HTML of a prerendered version of a page contains all the links that you expect. In other words, check that it has the same navigation as your client-side rendered version. Otherwise, Google will not see the internal linking between pages. Critical areas where you may have problems are facet navigation, pagination, and the main menu.

3. Metadata

Metadata should not be dependent on JS at all. Google says that if you load the canonical tag with JavaScript, they probably will not see it in the first wave of indexing and they will not re-check this element in the second wave. As a result, the canonical signals might be ignored.
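A quick way to confirm that the canonical tag does not depend on JavaScript is to look for it in the raw HTML, before any script runs. The helper below is a minimal sketch using Python's standard `html.parser`; the function name and the rule that exactly one canonical must match the expected URL are assumptions made for this example.

```python
from html.parser import HTMLParser


class _HeadLinks(HTMLParser):
    """Collects canonical URLs declared with <link> inside <head>."""

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag == "head":
            self.in_head = True
        elif tag == "link" and self.in_head:
            attributes = dict(attrs)
            rel = (attributes.get("rel") or "").lower()
            if rel == "canonical" and attributes.get("href"):
                self.canonicals.append(attributes["href"])

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


def canonical_in_raw_html(html, expected_url):
    """True when the unrendered HTML declares exactly the expected canonical."""
    parser = _HeadLinks()
    parser.feed(html)
    parser.close()
    return parser.canonicals == [expected_url]
```

Running this against the HTML returned to a crawler User Agent on the staging site shows whether the tag is present without rendering, and whether it points at the right URL.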

While testing the staging site, always check if an SSR version has the canonical tag in the head section. If yes, confirm that the canonical tag is the correct one. A rule of thumb is to always send consistent signals to the search engine, whether you use client-side or server-side rendering. While checking the website, always verify that both CSR and SSR versions have the same titles, descriptions and robots instructions.

4. Structured data

Structured data helps the search engine to better understand the content of your website. Before launching the new website, make sure that the SSR version of your website displays all the elements that you want to mark with structured data and that the markup is included in the prerendered version. For example, suppose you want to add markup to the breadcrumbs navigation. In the first step, check if the breadcrumbs are displayed on the SSR version. In the second step, run the page through the Rich Results Test to see if the markup is valid.

5. Lazy loading

My observations show that modern websites love loading images and content (e.g. products) with lazy loading. The additional elements are loaded on a scroll event. It might be a nice feature for users, but Googlebot can’t scroll, so these items will not be discovered. Seeing that so many webmasters are having problems with implementing lazy loading in an SEO-friendly way, Google published guidelines on best practices for lazy loading. If you want to load images on scroll, make sure you support paginated loading. This means that as the user scrolls, the URLs should change (e.g., by adding pagination identifiers: ?page=2, ?page=3, etc.) and, most importantly, each URL should return the proper content, for example by using the History API. Do not forget to add rel="prev" and rel="next" markup in the head section to indicate the sequence of the pages.
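The paginated URLs and the rel="prev"/rel="next" markup can be generated on the server with a small helper. This is a sketch under assumptions: the `page` query parameter follows the `?page=2` pattern mentioned above, page 1 is assumed to live at the URL without the parameter, and both function names are hypothetical.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def page_url(base_url, page):
    """Return the URL for a page number; page 1 carries no page parameter."""
    parts = urlsplit(base_url)
    # Drop any existing page parameter but keep the other query parameters.
    query = [(key, value) for key, value in parse_qsl(parts.query) if key != "page"]
    if page > 1:
        query.append(("page", str(page)))
    return urlunsplit(parts._replace(query=urlencode(query)))


def pagination_links(base_url, page, last_page):
    """Build the <link> tags for the head section of a paginated page."""
    tags = []
    if page > 1:
        tags.append(f'<link rel="prev" href="{page_url(base_url, page - 1)}">')
    if page < last_page:
        tags.append(f'<link rel="next" href="{page_url(base_url, page + 1)}">')
    return tags
```

The same `page_url` values are what the client-side code would push through the History API while the user scrolls, so that every URL a crawler requests directly returns the matching slice of content.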

Snapshot generation and cache settings

If you decided to create a snapshot for search engine crawlers, you need to monitor a few additional things. You must check if the snapshot is an exact copy of the client-side rendered version of your website. You can’t load additional content or links that are not visible to a standard user, because it might be assessed as cloaking. If the process of creating snapshots is not efficient (for example, your pages are very heavy and your server is not that fast), it may result in broken snapshots. As a result, you will serve partially rendered pages to the crawler.

There are some situations when the rendering infrastructure must work at high speed, such as Black Friday, when you want to update the prices very quickly. You should test the rendering in extreme conditions and see how much time it takes to update a given number of pages.

The last thing is caching. Setting the cache properly will help you to maintain efficiency because many pages can be served quickly, directly from memory. However, if you do not plan the caching correctly, Google may receive stale content.

Monitoring

Monitoring post-migration is a natural step. However, in the case of moving to a JS framework, there is sometimes an additional thing to monitor and optimize. Moving to a JS framework may affect web performance. In many cases, the payload increases, which may result in longer loading times, especially for mobile users. A good practice is to monitor how your users perceive the performance of the website and compare the data before and after migration. To do so you can use the Chrome User Experience Report.

Source: Google

It will show whether the real user metrics have changed over time. You should always aim at improving them and loading the website as fast as possible.

Summary

Migration is always a risky process and you can’t be sure of the results. The risks can be mitigated if you plan the whole process in detail. For all types of migrations, planning is as important as the execution. If you take part in a migration to a JS framework, you need to deal with additional complexity. You need to make additional decisions and you need to verify additional things. However, as web development trends continue to head in the direction of using JavaScript more and more, you should be prepared to face a JS migration sooner or later. Good luck!

Opinions expressed in this article are those of the guest author and not necessarily Search Engine Land. Staff authors are listed here.

About The Author

Maria Cieslak is a Senior Technical SEO Consultant at Elephate, the "Best Small SEO Agency" in Europe. Her day to day involves creating and executing SEO strategies for large international structures and pursuing her interest in modern websites built with JavaScript frameworks. Maria has been a guest speaker at SEO conferences in Europe, including 2018's SMX London, where she has spoken on a wide range of subjects, including technical SEO and JavaScript. If you are interested in more information on this subject, you should check out Elephate's "Ultimate Guide to JavaScript SEO". SEO via Search Engine Land https://ift.tt/1BDlNnc November 30, 2018 at 06:30AM

https://ift.tt/2ABrYdT

Search Engine Land was mistakenly removed from the Google Index https://ift.tt/2TYC0yt Google’s system misidentified our site as being hacked, and thus it was removed from the Google index for a period of time this morning. Google has reversed this and our site should return to Google’s search results shortly. Please visit Search Engine Land for the full article. SEO via Search Engine Land https://ift.tt/1BDlNnc November 30, 2018 at 06:21AM

https://ift.tt/2zsgjOL

I Don't Know Why Search Engine Land Is Not Currently Indexed In Google https://ift.tt/2RuflbT I woke up this morning to probably close to a hundred messages across email, social media and other channels saying that Search Engine Land is not currently in the Google index. People want to know why. I honestly do not know why. I do not have access to the code base or to Google Search Console. SEO via Search Engine Roundtable https://ift.tt/1sYxUD0 November 30, 2018 at 05:54AM |


|
