
http://bit.ly/2UIG8qy

15 A/B Testing Mistakes to Avoid http://bit.ly/2XmnhhJ

You’re one of those people, aren’t you? The kind who stay up late at night, thinking of ways to improve their businesses. It’s great to have this much passion.

While the general concept of A/B testing is very simple, it’s fairly difficult to run a conversion rate optimization (CRO) campaign properly. There’s a reason why conversion experts get paid a ton. Too often, marketers and business owners run a split test, make a change based on the results, and find out that it either made no difference or produced a negative result.

That being said, you can definitely run split tests on your own and get great results. However, you need to make sure that you set up your test correctly and that you know how to interpret the results. I know they sound like simple things, but most split testers make several mistakes that lead to subpar results.

In this post, I’m going to show you 15 common A/B testing mistakes. After you understand these, you’ll be able to run split tests with more confidence and steadily improve your conversion rates. Let’s dive in.

1. Expecting CRO To Be A Silver Bullet

As awesome as CRO is, it’s not a silver bullet that solves every problem for your business. Sometimes the problem lies deeper than surface conversions, because it’s an underlying flaw within your business.

Let’s say, for example, that you start a SaaS business that provides a loyalty program for eCommerce stores. You expect it to be a hit, so after building the product, you release it to the public and wait for the sales to flood in. Two months later, the floodgates are still closed, and you’re left wondering what’s going on. Maybe CRO will help! You start reading CRO articles and stumble upon this guide at Quick Sprout. Thinking CRO will solve your problems, you dig in, read everything you can, come up with some hypotheses for testing, and then begin your first test. Surely CRO will solve everything and get you back on the right track.
In some cases, this may work, because the problem could be that you’re not explaining what you do well enough, which is costing you sales. But in other cases, there’s a bigger problem than convincing more people to sign up. It’s possible that people aren’t interested in what you’re selling because there’s no product-market fit, i.e., there’s just no demand for your offering. In situations like this, you either need to pivot and create a new product or find out how to tweak your current product so it matches what customers want. There’s still hope for your business, but you need to do more than CRO to get back on track.

So how do you know which case matches your business? First, pay attention to whether or not people are signing up, using your service, or buying your product. When you sell to someone in person, do they sign up and use what you’re selling? If yes, then there’s a good chance that there’s demand for what you’re selling. Usually, if you can sell the product in person, you can find a way to sell it online. You just need to figure out how to duplicate your offline sales pitch for the online world.

Another test you can run is to see how many people would be disappointed without your service. Sean Ellis, the founder of Qualaroo and the first marketer at Dropbox, Lookout, Xobni, LogMeIn, and Uproar, believes you’ve found product-market fit when 40% of your customers would be very disappointed without it. This number is somewhat arbitrary, but it’s one he’s found to hold true after looking at almost 100 different startups. You can find out what this number is for your customers by conducting a survey that asks whether they would be disappointed if your business closed its doors. There’s a good chance you have a viable product if at least 40% of customers would be very disappointed without your offering.
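Scoring that survey is simple arithmetic. Here’s a minimal Python sketch, assuming each answer has been exported as one of three hypothetical labels ("very disappointed", "somewhat disappointed", "not disappointed"); the 40% threshold is the one Ellis uses, and the survey data is made up for illustration.

```python
from collections import Counter
from typing import Sequence

# Hypothetical answer label; use whatever your survey tool exports.
VERY = "very disappointed"


def share_very_disappointed(responses: Sequence[str]) -> float:
    """Fraction of respondents who would be very disappointed without the product."""
    if not responses:
        raise ValueError("need at least one survey response")
    counts = Counter(r.strip().lower() for r in responses)
    return counts[VERY] / len(responses)


def passes_ellis_test(responses: Sequence[str], threshold: float = 0.40) -> bool:
    """True when at least `threshold` of respondents answered 'very disappointed'."""
    return share_very_disappointed(responses) >= threshold


# Hypothetical survey of 100 customers.
survey = (["very disappointed"] * 42
          + ["somewhat disappointed"] * 35
          + ["not disappointed"] * 23)
print(f"{share_very_disappointed(survey):.0%} very disappointed")
print("Likely product-market fit" if passes_ellis_test(survey) else "Keep working on the product")
```

Only the "very disappointed" share counts toward the threshold; "somewhat disappointed" answers are deliberately ignored.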

These two tests will help you determine whether or not conversion rate optimization can help your business. If you do have product-market fit for what you’re selling, then conversion rate optimization can help. If not, you’re better off tweaking your product or improving your business model before worrying too much about how to increase conversion rates.

2. Running Before And After Tests

Sometimes it’s tempting to run a before and after test, even when you’ve been warned not to. With a before and after test, you measure conversions on your site for a period of time, make a change, and then measure conversions for another period of time. Instead of testing two or more versions simultaneously, you test different versions during different periods of time.

As we’ve mentioned before, this is a bad idea because traffic quality varies from day to day and week to week. It’s not uncommon for a page to convert at 15% one day, 18% the next, and 12% the day after. It’s also not uncommon for a page to convert at 15% one week and 18% the following week. These swings can be caused by visitors’ moods, the economic climate, the quality of traffic, or any number of other factors.
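Showing every version during the same period usually means assigning each visitor to a version at random, but consistently, so a returning visitor keeps seeing the same page. Below is a minimal Python sketch of one common approach, hashing a visitor ID; it assumes each visitor has a stable ID (for example, from a first-party cookie), and the function name, IDs, and experiment label are hypothetical.

```python
import hashlib
from typing import Sequence


def assign_variant(visitor_id: str, experiment: str,
                   variants: Sequence[str] = ("A", "B")) -> str:
    """Deterministically map a visitor to one variant of an experiment.

    Hashing the experiment name together with the visitor ID gives a
    stable, roughly uniform split: the same visitor always sees the same
    version, and every version receives traffic on the same days.
    """
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]


# Hypothetical visitor IDs.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"visitor-{i}", "homepage-headline")] += 1
print(counts)  # each variant gets roughly half of the visitors
```

Because both versions run side by side, a traffic spike or a slow week affects them equally, which is exactly what a before and after test cannot guarantee.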

Changes in traffic quality affect conversion rates, which is why you need to run A/B tests, not before and after tests. For example, your site might get covered by Technorati, which increases traffic but decreases conversion rates. A large number of people visit your site, but they’re not as qualified as someone who clicks through from a Google ad. Your conversion rate gets watered down, and if you compared a new version one week against the old version the next week in this situation, the results would be skewed.

The only way to account for all these factors is to run a scientific A/B or multivariate test where each version is shown to a proportionate share of visitors throughout the testing period. By randomly showing the two (or more) versions to visitors during the same week, you make it much more likely that the results will be statistically valid.

This is why you should never run a before and after test. There’s no way to know for sure how meaningful the results are, which means you have no way to make an educated decision about which version is best. Always run an A/B, multivariate, or split test to get the most accurate results and to make decisions that will benefit your business.

3. Ending your tests too soon

Ideally, anyone conducting split tests should have at least a basic understanding of statistics. If you don’t understand concepts such as variance, you’re likely making many mistakes. If you need an introduction, take the free statistics classes at Khan Academy.

At this point, I’ll assume you have the basics down. Although many marketers have a good grasp of the basics, they still frequently make one mistake: ending the test without a sufficient sample size. There are three reasons for this:

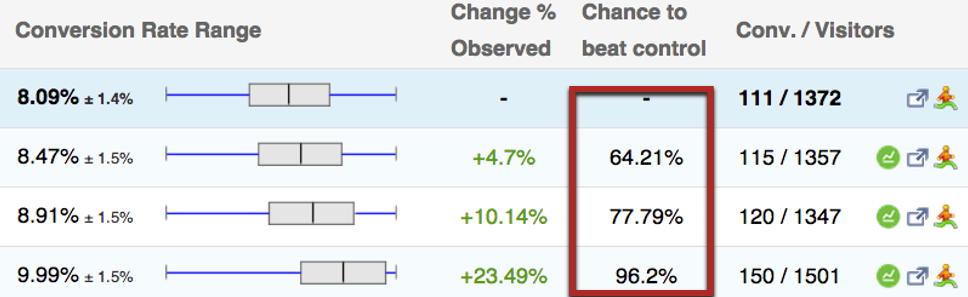

The first and third can be fixed. If you’ve ever used a tool such as Unbounce to conduct split tests, you know the analytics show you something like this:

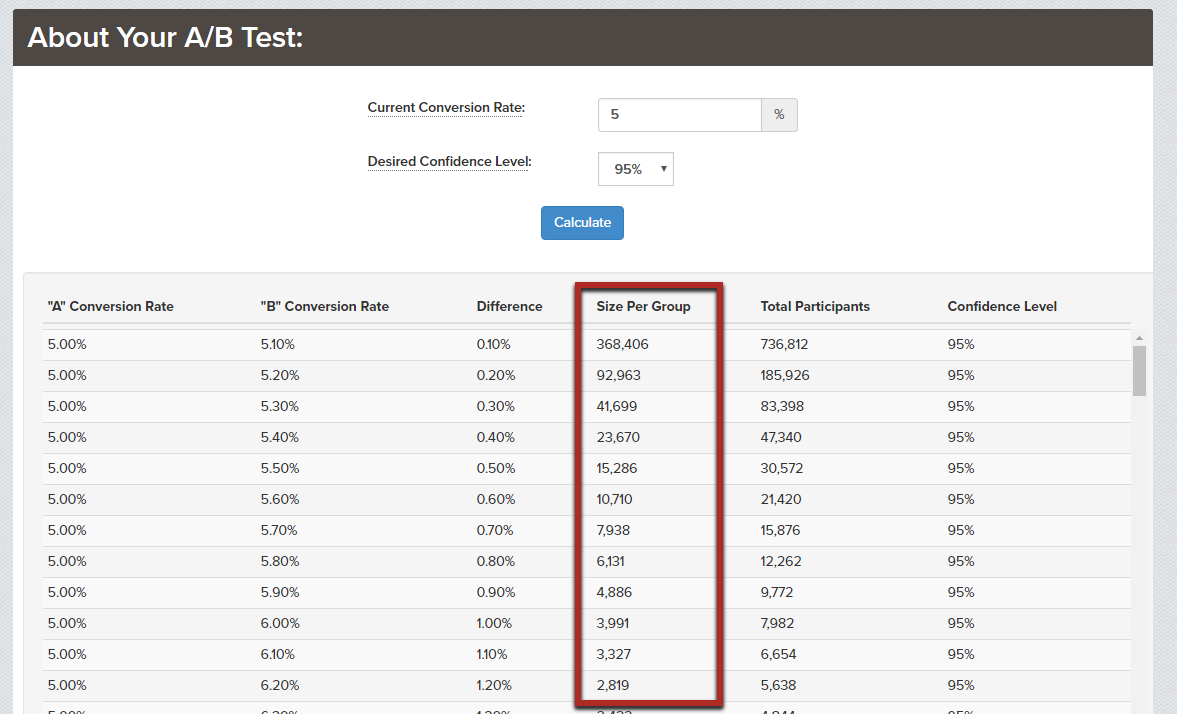

The table gives you the current conversion rate of each page you’re testing and a confidence level that the winning one is indeed the best. The standard advice is to stop the test once you reach 90-95% significance, which is fine advice. The problem is that a lot (not all) of these tools will show you these significance values before they mean anything. You’ll think you have a winner, but if you let the test keep running, you might find that the opposite is true. Keep in mind that conversion experts like Peep Laja aim for at least 350 conversions per page in most cases (unless there’s a huge difference in conversion rates).

You must understand sample size

The fix for this problem, and most sample size problems, is to learn how to calculate a valid sample size on your own. It’s not very hard if you use the right tools. Let me show you a few you can use.

The first is the test significance calculator. It’s very simple to use: just input the base conversion rate of your original page as well as your desired confidence level (90-95%).

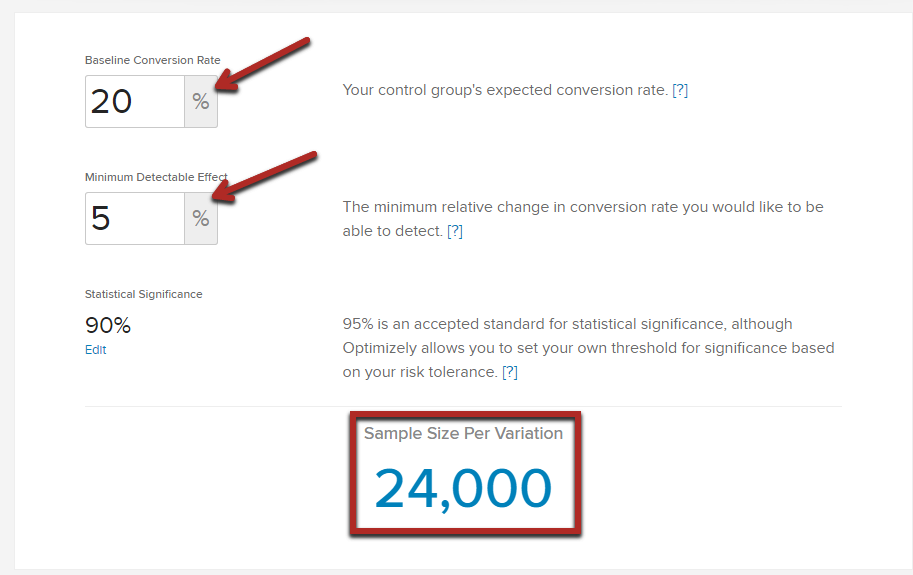

The tool comes up with a chart covering many scenarios. You can see that the bigger the gap between the “A” page and the “B” page, the smaller the sample size needs to be. That’s why it’s best to test things that could potentially make big differences: they also make your tests finish faster.

Here’s another sample size calculator you can use. Again, you put in your baseline conversion rate, but this time, you also choose the minimum detectable effect.

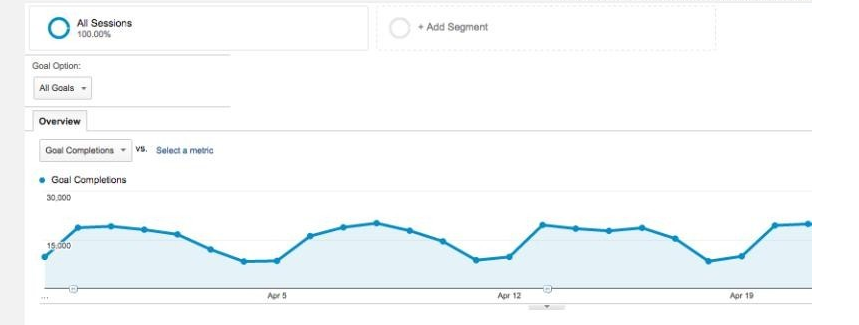
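To see roughly what calculators like these compute, here’s a minimal Python sketch of the common normal-approximation formula for comparing two conversion rates with a two-sided test. It assumes 80% statistical power, which this post doesn’t specify; online calculators may use a different formula or defaults, so their numbers can differ somewhat from this sketch.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, relative_mde: float,
                            confidence: float = 0.90, power: float = 0.80) -> int:
    """Visitors needed in EACH variant to detect a relative lift of `relative_mde`.

    Uses the normal approximation for a two-sided test of two proportions.
    """
    p1 = baseline
    p2 = baseline * (1 + relative_mde)      # conversion rate we want to be able to detect
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    root = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
            + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2)))
    return math.ceil(root ** 2 / (p2 - p1) ** 2)


# A 20% baseline with a 5% relative effect means telling 20% apart from 21%.
n = sample_size_per_variant(0.20, 0.05)
print(f"~{n:,} visitors per variant")
```

The required sample grows with the inverse square of the gap, so halving the effect you want to detect roughly quadruples the number of visitors you need.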

The minimum detectable effect here is relative to your baseline, so multiply the two together (a 20% baseline times a 5% relative effect) to get 1 percentage point. That means the test is sized to detect, with 90% confidence, a second page that converts below 19% or above 21% (plus or minus 1 percentage point from your 20% baseline). It also means that if your split test results show a 20.5% conversion rate for your second page, you cannot confidently say that it’s better. Use either of these calculators to get an idea of what sample size you need for your tests. More is always better.

4. You didn’t test long enough

No, this is not the same as testing until you reach statistical significance. Instead, it’s about the absolute length of time you run your split test for.

Say you used one of the calculators I showed you and found that you need a minimum sample size of 10,000 views for each page. If you run a high-traffic site, you might be able to get that much traffic in a day or two. Split test finished, right? That’s what most split testers do, and it’s wrong.

All businesses have business cycles. It’s why your website’s traffic varies from day to day and even from month to month.
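Reaching the sample size is only half of the stopping rule; the run also has to cover whole business cycles, as explained next. A minimal Python sketch of that rule, assuming traffic is split evenly across variants and the cycle starts on the day the test starts; the function name and the 12,000 and 1,000 daily visitor figures are hypothetical.

```python
import math


def run_length_days(sample_per_variant: int, n_variants: int,
                    daily_visitors: int, cycle_days: int = 7) -> int:
    """Days to run a split test: enough for the sample, rounded up to whole cycles.

    `daily_visitors` is the traffic entering the test each day, split
    evenly across the variants.
    """
    days_for_sample = math.ceil(sample_per_variant * n_variants / daily_visitors)
    full_cycles = max(1, math.ceil(days_for_sample / cycle_days))
    return full_cycles * cycle_days


# 10,000 views per page and two pages:
print(run_length_days(10_000, 2, 12_000))                # 7: one full week, not 2 days
print(run_length_days(10_000, 2, 1_000))                 # 21: 20 days rounded up to 3 weeks
print(run_length_days(10_000, 2, 1_000, cycle_days=30))  # 30: one full monthly cycle
```

Rounding up rather than down is the point: stopping at 1.5 cycles over-represents whichever part of the cycle you happened to catch twice.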

For some businesses, buyers are ready to go at the start of the week. For others, buyers largely wait until the end of the week so that they can get started over the weekend. It’s not valid to assume that buyers at one part of the cycle are the same as buyers at another part. Instead, you need an overall representation of your customers through all parts of the cycle.

Your first step is to determine what your business cycle is. The most common lengths are one week and one month. To determine yours, look at where your sales typically peak. The distance between your peaks is one cycle.

Next, run your split tests until you (1) reach the minimum sample size and (2) complete a whole number of business cycles, e.g., one, two, or three full business cycles, but never 1.5. That’s the best way to ensure that you have a representative sample.

5. Trusting What You Read

Another mistake you can make is trusting what you read online and blindly implementing someone else’s test results on your site. Maybe another site changed their button copy and increased conversions by 28%. That’s great, but there’s no guarantee you’ll get the same results on your site.

You might stumble on a post about the Performable test we mentioned previously, where conversions went up 21% after the button color was changed from green to red. Assuming that red is the magical conversion color, you decide to make the same change on your site, but without testing. Unbeknownst to you, changing the button color decreases conversions by 15%, but because you didn’t test, you’ll never know.

There are a lot of different factors that determine why a change works on a site. Maybe a site’s customers are looking for something in particular, or maybe a certain color contrasts with the site’s primary color, draws attention to the CTA, and gets people to take action. Who knows? All you know is whether or not something works after you run a test. If you don’t test, you could end up implementing someone else’s result only to shoot yourself in the foot by accidentally decreasing conversions.

Another problem, which we touched on before, is that the results could be reported inaccurately or the test could have been run improperly. When you read about a test on another site, there’s no way to know for sure that it was run to a 95% confidence level or higher unless that gets reported, and even then, you can’t know with 100% certainty. The only way to know for sure is to test the change yourself and see how it impacts your site.

6. Expecting Big Wins From Small Changes

Yet another big mistake people make is expecting big wins from small changes. They might add one line of copy to a page or only test button copy or headline changes. All of these are great things to test, but frequently, they’ll only get you so far.

In many cases, it’s best to test radical changes to see how conversions are impacted. Then, once you’ve come up with a variation that significantly increases conversions, you can continue tweaking to inch conversions up even higher. But if you only tweak your site, you’ll never dramatically increase conversions, which means you’ll never find a new baseline to work from before testing additional tweaks.

The tests run on Crazy Egg are a great example of this. The first big win came from changing the homepage to a long-copy sales letter. Then, after that big win from a drastic change, the Crazy Egg team tested call-to-action buttons and other small changes to improve the results even more.

Now this isn’t to say that you shouldn’t ever run small tests. If you’re just getting started, headlines and button copy are great places to start. But if you’ve already practiced with those types of tests and have a pretty good idea what you’re doing, you may want to mix things up a bit and test an entirely new version of your homepage, because you have a better chance of getting a big win from a drastic change than from a small tweak.

7. Thinking The First Step Is To Come Up With A Test

We can’t stress enough how important it is to gather data before coming up with your first test. Yes, you could start with a test, and maybe, if you already know enough about your site and customers, you’ll get lucky and improve conversions. But this just isn’t the best route to take.

It’s much better to survey your customers first, because you aren’t the one purchasing what you’re selling. Your customers are the ones who will pay for your offering. That’s why you need to find out what they think about your product and what hurdles keep them from being ready to buy.

Good questions to ask at this point include:

These types of questions will teach you more about your customers and will reveal what’s preventing them from buying. Once you gather the data, analyze it, and come up with a hypothesis, you’re ready to run a test and measure the results.

8. Running Too Many Tests

It’s easy to make the mistake of running too many tests. Maybe you get excited about A/B testing, see a lot of opportunities on your site, and run 20 tests in the first month. Even if you have enough traffic for that many tests, it’s not recommended.

The reason is that it takes time to gather data, analyze the data, run a test, measure the results, and then decide what to do next. It’s OK to run back-to-back tests in some cases, but you don’t want to run too many tests in too short a period of time.

The main issue is that every test is an opportunity to decrease conversions and therefore revenue. The hope is that you’ll get a conversion boost, but that’s not always the case. Since it isn’t, every test you run risks decreasing revenue, which means that if you run too many tests, you can significantly hurt your revenue stream. It’s much better to take your time to gather sufficient data, analyze the results, and then run educated tests based on that data than to quickly burn through a lot of tests that may or may not benefit your bottom line.

Ten days later, the challenger variation increased conversions by 25.18%, proving that the initial sample size was too small to produce statistically significant results.

9. Testing Too Many Variables

It’s very possible to test too many variables at once. If you test too many things at one time, you won’t know what’s affecting conversions and may miss out on a positive improvement. You might change the headline and the button copy and add a testimonial, and then be disappointed with the results, when one of the changes (such as the testimonial) may have improved conversions on its own while another change (possibly the headline) dragged conversions down.

Timothy Sykes had this experience recently with his sales letter. At one point, he changed his video, headline, copy, and form field design all at once. This lowered conversions and didn’t give him any idea whether some of the changes were worth implementing.

Testing too many variables at once can leave you scratching your head, wondering which changes improved or decreased conversions and which ones should be implemented. So on the one hand, sometimes you need to test radical changes to see if they improve conversions, but on the other hand, you want to be careful about testing too many variables too frequently, because you won’t find out what did and didn’t improve conversions.

10. Testing Micro-Conversions

The next mistake CRO professionals make is testing micro-conversions. This means that instead of measuring your end goal, you measure the number of conversions at an earlier step in the process.

An example of this would be measuring the number of people who click on the 15-day free trial link on a site like Help Scout. It’s great if more people click on the link, but what really matters is that more people fill out the form and actually sign up for a free trial.
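You can check both levels of the funnel with the same significance test. Below is a minimal Python sketch of a pooled two-proportion z-test (a common choice for comparing conversion rates, though not the only one), applied to hypothetical numbers for a free trial page where variant B gets more clicks on the trial link but slightly fewer completed sign-ups.

```python
import math
from statistics import NormalDist
from typing import Tuple


def two_proportion_z_test(conv_a: int, n_a: int,
                          conv_b: int, n_b: int) -> Tuple[float, float]:
    """Return (rate_b - rate_a, two-sided p-value) using a pooled z-test."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return rate_b - rate_a, p_value


# Hypothetical results: 10,000 visitors saw each version of the page.
visitors = 10_000
clicks = {"A": 800, "B": 1_000}   # micro-conversion: clicked the free trial link
signups = {"A": 200, "B": 190}    # macro-conversion: completed the sign-up form

for label, counts in (("trial-link clicks", clicks), ("trial sign-ups", signups)):
    diff, p = two_proportion_z_test(counts["A"], visitors, counts["B"], visitors)
    print(f"{label}: B - A = {diff:+.2%} (p = {p:.3f})")
```

With these numbers, B is a clear winner on clicks (p well below 0.05) but is not better, and is nominally worse, on sign-ups, the goal that actually matters.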

Just because more people click on a free trial link doesn’t mean more people will sign up. That’s why you need to measure both micro- and macro-conversions to make sure you’re accomplishing the goal you set out to accomplish.

The problem with measuring micro-conversions, which we touched on briefly earlier in this guide, is that you never know how a micro-conversion will affect the end goal for your product. Changing a headline could get 10% more people to click on a free trial link but lead to a smaller percentage of people signing up for a free trial. It’s entirely possible that the headline somehow tricks people into clicking but annoys them once they get to the free trial form. In a case like this, it doesn’t matter that more people take the next step if they’re not completing the goal you really want them to complete.

With that said, quite often it’s good to improve micro-conversions. You definitely want more people to go from step one to step two, because more people on step two means more people who are further along in your funnel and who may convert. But the point is that you can’t completely trust these numbers. You also need to measure conversions for your final goal so you can be sure that the increased micro-conversions are actually improving the macro-conversion that matters most for your business.

11. Not Committing To Testing Everything

One of the biggest mistakes you can make is not testing every change you make on your site. You may think that rearranging the homepage or swapping out a current picture for a new one won’t affect conversions, but there’s a good chance that it will. The only way to find out is to run a test.

Sometimes a CEO wants something changed and doesn’t care that much about conversions. He’s convinced that it will help the business, so he wants an image or feature added.
And if your business hasn’t committed to testing as an organization, and you don’t have buy-in at every level, you may have no choice but to add the extra feature. But in another scenario, you do have an option. This is the scenario in which the entire organization is on board with testing and understands how important it is. Everyone from the CEO to the communications department understands how valuable CRO is and how every little change can affect conversions. At this point, you’re committed to testing, and you know you can’t make any changes without running an A/B test first to see how they impact sales. Once you have this level of commitment, you can be sure you won’t make the mistake of implementing changes without testing them first.

12. Not segmenting your traffic

When it comes to split tests, the more control you have, the better. Whenever you do any sort of analysis on your website, whether for split tests or not, you need to understand and consider segments. A segment refers to any section of your traffic. The most common segments are:

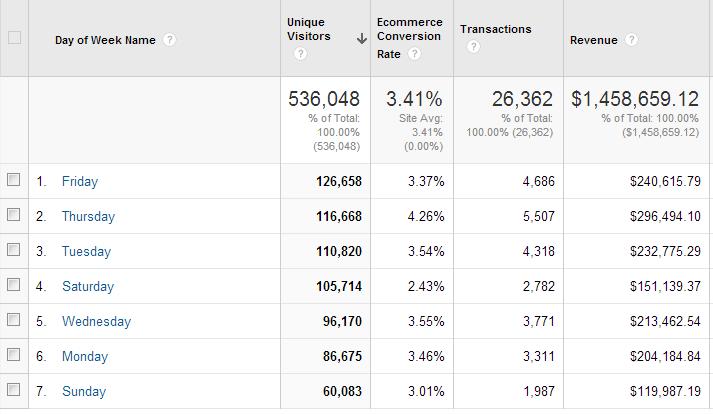

Here is data broken down by the days of the week:

The important thing to know about segments is that you cannot compare visitors from two different segments. For example, if you have 100 visitors who use Chrome and 100 visitors who use Internet Explorer, there’s a good chance that they will act differently. So, imagine if you sent the majority of Chrome users to one landing page and the majority of the IE users to a different landing page. Can you fairly compare the results? No way. You might arrive at the opposite conclusion if you reversed the test.

The practicality of segmenting

Ideally, all the visitors sent to each version of your test would be identical. In reality, that’s impossible. Having a large enough random sample (see mistake #3) will mitigate a lot of your issues, but you will likely end up judging tests that aren’t perfectly segmented. That’s because you can’t perfectly segment a test in the vast majority of situations; if you did, you’d be left with barely any traffic. For example, how many users would tick all these boxes?

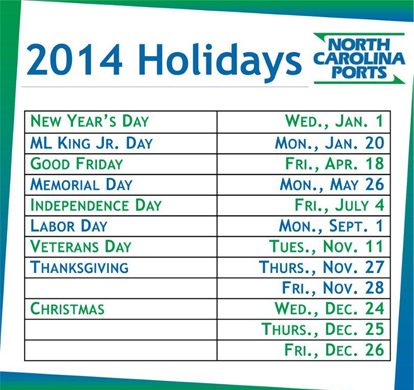

Even if your site has a lot of traffic, you won’t have many users matching all the parameters, and certainly not a big enough sample to run a test on.

The solution is compromise. Right now, I want you to identify the segments that have the biggest influence on your results. For most sites, that will come down to the traffic source and, possibly, the location. When you’re analyzing your split test results, make sure you’re analyzing results only for users from a specific traffic source (e.g., organic search, paid advertising, referral, etc.).

13. Running a split test during the holidays

This is related to segmenting, but it’s an often-overlooked special case. Your traffic during holidays can be very different from your typical traffic. Including even one day of that abnormal traffic could result in optimizing your site for people who visit only a few times a year.
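Both ideas, analyzing one traffic source at a time and keeping abnormal holiday traffic out of the data, come down to filtering visits before you compute conversion rates. A minimal Python sketch, assuming a visit log with hypothetical field names (`day`, `source`, `variant`, `converted`) and a hypothetical holiday window; a real analysis would need far more data than this toy log.

```python
from datetime import date
from typing import Dict, Iterable, Mapping


def conversion_by_variant(visits: Iterable[Mapping], source: str,
                          exclude_start: date, exclude_end: date) -> Dict[str, float]:
    """Conversion rate per variant for one traffic source, skipping an excluded date window."""
    seen: Dict[str, int] = {}
    converted: Dict[str, int] = {}
    for visit in visits:
        if visit["source"] != source:
            continue  # analyze one segment at a time
        if exclude_start <= visit["day"] <= exclude_end:
            continue  # drop abnormal holiday traffic
        variant = visit["variant"]
        seen[variant] = seen.get(variant, 0) + 1
        converted[variant] = converted.get(variant, 0) + int(visit["converted"])
    return {variant: converted[variant] / seen[variant] for variant in seen}


# Hypothetical visit log.
visits = [
    {"day": date(2018, 12, 19), "source": "organic", "variant": "A", "converted": False},
    {"day": date(2018, 12, 20), "source": "organic", "variant": "A", "converted": True},
    {"day": date(2018, 12, 24), "source": "organic", "variant": "A", "converted": True},  # holiday
    {"day": date(2018, 12, 20), "source": "organic", "variant": "B", "converted": True},
    {"day": date(2018, 12, 21), "source": "organic", "variant": "B", "converted": True},
    {"day": date(2018, 12, 21), "source": "paid", "variant": "A", "converted": False},    # other segment
]
rates = conversion_by_variant(visits, "organic", date(2018, 12, 23), date(2019, 1, 2))
print(rates)  # {'A': 0.5, 'B': 1.0}
```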

You also have to consider other special days that influence the type of traffic driven to your site:

On top of that, these special days aren’t usually one-day events. For example, when it comes to Christmas, those atypical visitors may come to your website in the run-up to the big day and for a week or two after.

The solution? Go longer

The best solution is to exclude these days from your test, because they will contain skewed data. If that’s not possible, the next best solution is to extend your split tests. Go over your minimum sample size so that you have enough data to drown out any skewed data.

14. Launching a new design

The biggest blunder you can make is to redesign your website just because your design is outdated. I’m a big believer that you should never just redesign things; instead, you should continually tweak and test your design until it’s to your customers’ liking. At the end of the day, it doesn’t matter what you think of your design. All that matters is that it converts well. If you jump the gun and change things up just because you want to, you can drastically hurt your revenue.

Similar to the example in mistake #9, Tim Sykes also launched a new design because he wanted something fresh. Within hours of launching it, he noticed that his revenue had tanked. There was nothing wrong with the code, and everything seemed to work, but the design wasn’t performing well. So he had no choice but to revert to the old design.

Your website exists to win business. Creating a new design won’t always boost your revenue, so continually tweak and test elements instead of redoing your whole design at once.

15. Measuring the wrong thing

Although it’s right there in the name, it’s still easy to overlook: conversion rate optimization is all about… conversions. Whenever possible, you need to measure conversions, not bounce rates, email opt-in rates, or email open rates. Those other numbers do not necessarily indicate an improvement.
Here is a simple example to illustrate this:
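A minimal sketch with hypothetical numbers (1,000 visitors per page), chosen to match the figures discussed here: page A collects 66% more email opt-ins, but page B makes twice as many sales.

```python
# Hypothetical results for two versions of a page, 1,000 visitors each.
visitors = 1_000
results = {
    "A": {"optins": 166, "sales": 10},
    "B": {"optins": 100, "sales": 20},
}

optin_rate = {page: r["optins"] / visitors for page, r in results.items()}
sales_rate = {page: r["sales"] / visitors for page, r in results.items()}

optin_lift_a = optin_rate["A"] / optin_rate["B"] - 1   # how much better A is on opt-ins
sales_ratio_b = sales_rate["B"] / sales_rate["A"]      # how many times better B is on sales

print(f"Opt-in rate: A {optin_rate['A']:.1%}, B {optin_rate['B']:.1%} (A is {optin_lift_a:.0%} better)")
print(f"Sales rate:  A {sales_rate['A']:.1%}, B {sales_rate['B']:.1%} (B is {sales_ratio_b:.1f}x better)")
```

Judged on opt-ins alone, A wins; judged on the conversion that pays the bills, B wins.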

If you were measuring only your email opt-in rate on the page, you’d say that page A is decisively better (66% better). In reality, page B converts traffic twice as well as page A. I’ll take twice the profit over 66% more emails on my list any day. This is another simple thing, but you need to keep it in mind when setting up any split test.

Conclusion

If you’ve started split testing pages on your website or plan to in the future, that’s great. You can get big improvements, leading to incredible growth in your profit. But if you’re going to do split testing without the help of an expert, you need to be extra careful not to make mistakes.

I’ve shown you 15 common ways that people mess up their split tests, but there are many more. Any single one of these can invalidate your results, which may lead you to mistakenly declare the wrong page the winner. You’ll end up wasting your time and sometimes even hurting your business. Even if you get a good return from split testing, it might not be as much as it could be.

It’s OK to make mistakes while you optimize your site for conversions. As long as you learn from them and avoid making the same ones again, you’ll be fine.

Social Media via Quick Sprout http://bit.ly/UU7LJr April 18, 2019 at 09:19AM

